The digital world is awash in data. From the proliferation of smartphones and smart devices to the deployment of complex Industrial Internet of Things (IIoT) sensors, the volume and velocity of data generated at the periphery of the network are staggering. For years, the dominant model for handling this deluge has been cloud computing, which centralizes processing power in remote data centers.

While the cloud offers immense scalability and flexibility, it faces inherent limitations when dealing with time-sensitive data and high-bandwidth requirements. Enter Edge Computing, a revolutionary paradigm shift that brings computation and data storage closer to the source of data generation—the “edge” of the network. This move is not merely an optimization; it’s a fundamental re-architecture of how we compute, promising lower latency, enhanced security, and a new era of intelligent, real-time applications.

Defining the Edge: Beyond the Data Center

At its core, edge computing is a distributed computing paradigm. Instead of sending all raw data to a distant cloud server for processing, it leverages intermediate, local resources. The “edge” isn’t a single defined location; it’s a spectrum of computational resources located between the central data center and the end-user or device.

- Device Edge (or Micro-Edge): This includes the compute capabilities embedded directly within the end-user devices themselves, such as smartphones, smart cameras, autonomous vehicle sensors, or factory floor PLCs.

- Near Edge (or Fog Computing): These are small-scale data centers or server racks located in intermediate aggregation points like cell towers, regional offices, or on-premises gateways. These locations process data from many connected devices before sending aggregated, relevant insights to the cloud.

The underlying motivation for this distributed approach is to solve the critical challenges posed by the cloud-centric model, primarily latency and bandwidth. Imagine an autonomous car needing to decide whether to brake in milliseconds; waiting for data to travel hundreds of miles to the cloud and back is simply not feasible. Edge computing ensures that critical decisions can be made locally, instantly, and reliably.
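To put rough numbers on the braking example, here is a back-of-the-envelope sketch. The figures are assumptions for illustration only: a hypothetical data center 500 miles away, a vehicle travelling at 70 mph, and signals moving through fiber at roughly 200,000 km/s. It counts propagation delay alone, so real round trips (with routing, queuing, and processing) would be longer.

```python
# Rough propagation-delay estimate; all figures are illustrative assumptions.
SPEED_IN_FIBER_KM_PER_S = 200_000  # roughly two-thirds of the speed of light in vacuum
KM_PER_MILE = 1.609344


def round_trip_ms(one_way_miles: float) -> float:
    """Best-case round-trip propagation delay in milliseconds over fiber."""
    one_way_km = one_way_miles * KM_PER_MILE
    return 2 * one_way_km / SPEED_IN_FIBER_KM_PER_S * 1000


def distance_travelled_m(speed_mph: float, delay_ms: float) -> float:
    """Metres a vehicle covers while waiting delay_ms for a response."""
    metres_per_second = speed_mph * KM_PER_MILE * 1000 / 3600
    return metres_per_second * delay_ms / 1000


if __name__ == "__main__":
    rtt = round_trip_ms(500)  # hypothetical data center 500 miles away
    print(f"best-case round trip: {rtt:.1f} ms")
    print(f"distance covered at 70 mph: {distance_travelled_m(70, rtt):.2f} m")
```

Under these assumptions the physics alone costs about 8 ms per round trip, before any server has done any work. That is already at the limit of a single-digit-millisecond budget.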

The Imperative for Low Latency and High Bandwidth

The true power of the edge is unlocked in scenarios where immediate action is required. This is where latency, the delay between issuing a request and receiving its response, becomes a deal-breaker.

- Autonomous Systems: Self-driving cars, drones, and robots require near-instantaneous processing of sensor data (lidar, cameras) to navigate and avoid collisions. Latency often needs to stay in the single-digit milliseconds, a requirement the centralized cloud frequently cannot meet.

- Real-Time Monitoring and Control: In smart factories and industrial settings (IIoT), the real-time monitoring of machinery for predictive maintenance or emergency shutdown requires immediate analysis of sensor data. A delay could result in costly downtime or a safety hazard.

- Enhanced User Experience: For interactive augmented and virtual reality (AR/VR) applications, the slight delay from cloud processing can cause motion sickness or break the sense of presence. Processing the complex graphics and tracking data at the edge helps keep the experience seamless.

Furthermore, sending the sheer volume of raw data generated by thousands of sensors to the cloud can overwhelm network capacity and become prohibitively expensive. Edge devices can perform pre-processing, filtering, and aggregation of data, sending only the most relevant and compressed insights to the central cloud. This conserves bandwidth and reduces the overall computational load on the core network.
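As a minimal sketch of that pre-processing step, the snippet below collapses a window of raw readings into one summary record and forwards only out-of-range samples verbatim. The function name `summarize`, the `alert_above` threshold, and the synthetic temperature data are all hypothetical; a real deployment would pick its own statistics and wire format.

```python
import json
import statistics


def summarize(readings: list[float], alert_above: float) -> dict:
    """Collapse a window of raw readings into one compact summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
        # Out-of-range samples are kept verbatim so the cloud can inspect them.
        "alerts": [r for r in readings if r > alert_above],
    }


if __name__ == "__main__":
    # Hypothetical one-minute window of temperature samples at 10 Hz.
    window = [20.0 + (i % 7) * 0.1 for i in range(600)]
    window[123] = 95.5  # one out-of-range sample

    raw_bytes = len(json.dumps(window).encode())
    summary_bytes = len(json.dumps(summarize(window, alert_above=80.0)).encode())
    print(f"raw: {raw_bytes} B, summary: {summary_bytes} B")
```

The trade-off is that the cloud no longer sees every sample, so the summary fields have to be chosen with the downstream analytics in mind.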

🛡️ Security, Privacy, and Regulatory Compliance

While the move to distributed processing might seem to introduce more potential attack vectors, edge computing can significantly enhance both security and privacy.

- Reduced Data Transit: By processing data locally, sensitive information does not need to traverse long distances across the public internet to a remote cloud. This reduces the opportunities for interception or compromise during transmission.

- Data Sovereignty: Many industries, particularly healthcare and finance, are subject to stringent regulations governing how and where data is stored and processed (e.g., GDPR, HIPAA). Edge computing allows organizations to keep sensitive data within a specific geographic or local jurisdiction, aiding in compliance with data sovereignty laws.

- Isolated Operations: An edge deployment can be configured to keep operating even if the connection to the main cloud is temporarily lost. This resilience is crucial for mission-critical applications, such as remote surgical equipment or utility grid management, where continuity and reliable operation matter most.
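One common way to get the isolated-operation behavior described in the last bullet is a store-and-forward buffer: the node keeps working locally and queues outbound messages until the uplink returns. The sketch below is an illustration under assumptions, not a reference design. The class name `StoreAndForward` and the `send` callback are hypothetical, and a production version would persist the buffer to disk.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer messages locally and flush them when the uplink is available."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        self._send = send  # hypothetical uplink call; returns True on delivery
        self._buffer = deque(maxlen=max_buffered)  # oldest entries drop first when full

    def publish(self, message: dict) -> None:
        """Queue a message, then try to deliver everything that is pending."""
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Send buffered messages in order; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)
```

Because a message is removed only after `send` reports success, an outage delays delivery rather than losing data, up to the buffer limit.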

The security strategy at the edge focuses on robust authentication and encryption right at the device level, treating every edge node as a potentially vulnerable yet highly secured micro-data center.
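As one small piece of device-level authentication, a node can attach a keyed hash (HMAC) to each message so the receiver can check that it came from a holder of the shared key and was not altered in transit. This is a sketch only: the `sign` and `verify` helpers are hypothetical names, key provisioning is out of scope, and HMAC provides authenticity and integrity, not encryption, which would need something like TLS on top.

```python
import hashlib
import hmac
import json


def sign(payload: dict, key: bytes) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(envelope: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


if __name__ == "__main__":
    device_key = b"example-key-provisioned-out-of-band"  # placeholder, not a real secret
    envelope = sign({"sensor": "temp-01", "value": 21.5}, device_key)
    print(verify(envelope, device_key))
```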

Key Applications Driving Edge Adoption

The rapid adoption of edge computing is fueled by transformative applications across multiple sectors.

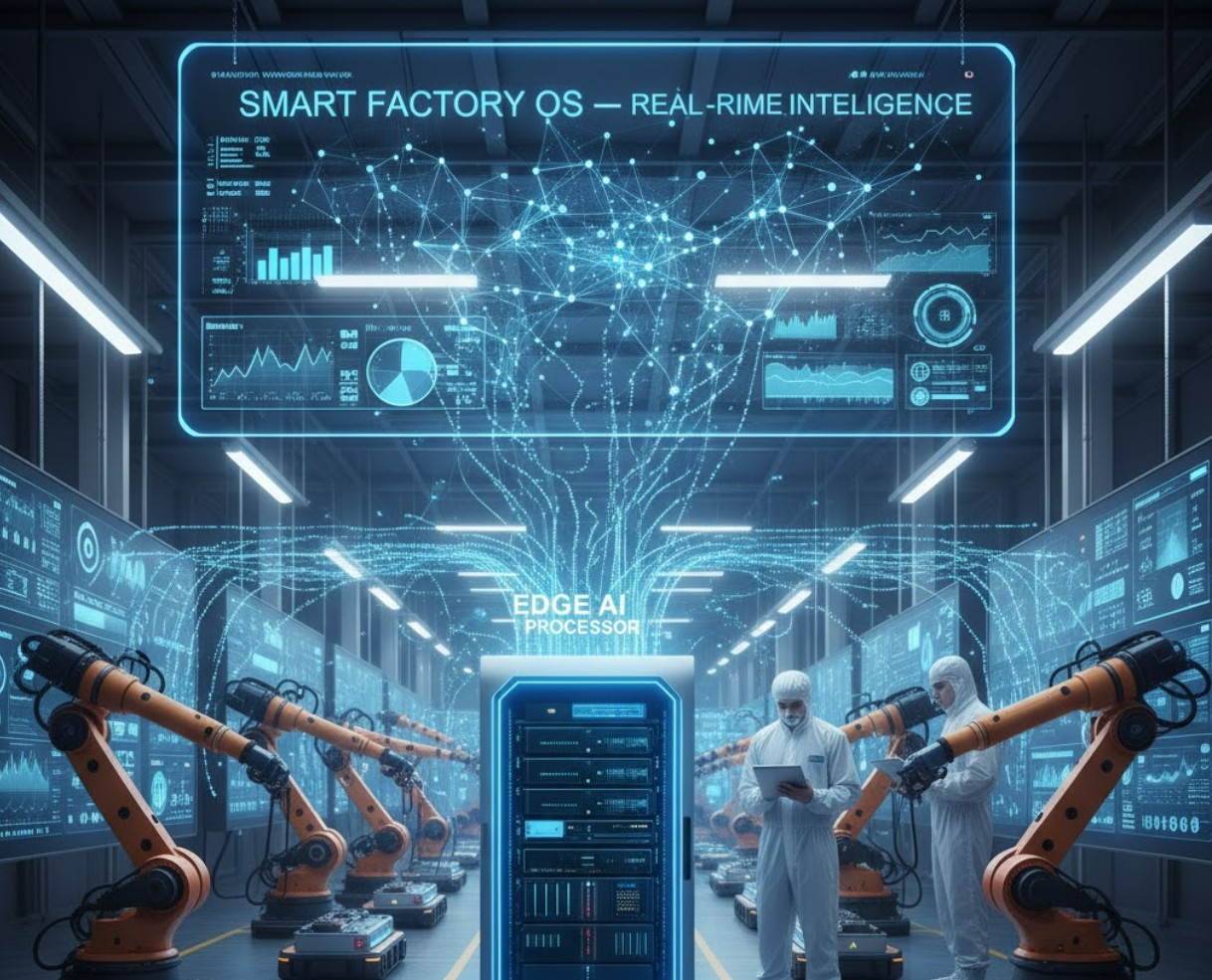

🏭 Industrial IoT and Smart Manufacturing

Edge computing is the backbone of Industry 4.0. By deploying compute resources on the factory floor, manufacturers can perform real-time machine vision for quality control, monitor equipment vibration and temperature for predictive maintenance, and optimize robotic assembly lines. The ability to detect an anomaly and prevent a failure in milliseconds directly translates to significant cost savings and improved throughput.
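To make the anomaly-detection idea concrete, here is a minimal sketch that flags a reading when it sits far outside the recent rolling window. A simple z-score rule is an assumption chosen for brevity; real predictive-maintenance systems typically use richer models. The class name `VibrationMonitor`, the window size, and the threshold are hypothetical.

```python
from collections import deque
import statistics


class VibrationMonitor:
    """Flag readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 50, z_threshold: float = 4.0):
        self._history = deque(maxlen=window)
        self._z_threshold = z_threshold

    def observe(self, value: float) -> bool:
        """Return True if value is anomalous relative to the recent window."""
        anomalous = False
        if len(self._history) >= 10:  # wait for a minimal baseline
            mean = statistics.fmean(self._history)
            stdev = statistics.pstdev(self._history)
            if stdev > 0 and abs(value - mean) / stdev > self._z_threshold:
                anomalous = True
        if not anomalous:
            self._history.append(value)  # keep outliers out of the baseline
        return anomalous
```

Each call does a small, fixed amount of work over a bounded window, which is the kind of check that fits on a modest gateway next to the machine.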

🌐 Smart Cities and Infrastructure

In a smart city, the edge is everywhere: traffic cameras, smart streetlights, public transportation sensors. Edge processing enables immediate analysis of traffic flow to dynamically adjust signal timing, real-time monitoring of air quality, and rapid detection of public safety incidents. This local intelligence makes city infrastructure more responsive and efficient.

🩺 Healthcare

The rise of remote patient monitoring and connected medical devices demands the edge. Wearable biosensors generate continuous data streams that need immediate analysis for critical alerts. Processing this data near the patient ensures that a life-threatening change is detected and flagged instantly, without the delay of cloud transit.

Retail and Customer Experience

Retailers use edge computing for real-time inventory tracking, personalized digital signage, and self-checkout systems. Edge AI models can instantly analyze customer behavior in-store to optimize product placement or detect theft, all while maintaining the speed required for a smooth customer transaction.

The Symbiotic Future: Cloud and Edge

It is a common misconception that edge computing seeks to replace the cloud. In reality, the future is not edge or cloud; it is edge and cloud. The two are complementary, each covering what the other handles poorly.

- The Edge: Handles real-time, high-speed, local processing, data filtering, and immediate decision-making. It acts as the frontline of the computing infrastructure.

- The Cloud: Continues to handle long-term storage, batch processing, deep analytics, model training, and global coordination. The less frequent, strategic insights aggregated by the edge are sent to the cloud to train better machine learning models, which are then pushed back out to the edge for real-time inference. This continuous feedback loop runs across what is often called the edge-cloud continuum.

This cooperative model allows organizations to leverage the best features of both architectures: the agility and speed of the edge combined with the massive scalability and deep analytical capabilities of the cloud.
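The division of labor between the two can be sketched in a few lines. In this toy illustration the "model" is just a threshold, the edge node makes immediate decisions and ships compact summaries, and the cloud derives an updated model from them. Every name here (`ThresholdModel`, `EdgeNode`, `retrain`) and the mean-plus-three-sigma rule are assumptions made for the example.

```python
import statistics
from dataclasses import dataclass


@dataclass
class ThresholdModel:
    version: int
    limit: float  # readings above this are flagged


class EdgeNode:
    def __init__(self, model: ThresholdModel):
        self.model = model
        self._window: list[float] = []

    def infer(self, reading: float) -> bool:
        """Local, immediate decision using the currently deployed model."""
        self._window.append(reading)
        return reading > self.model.limit

    def summary(self) -> dict:
        """Compact aggregate sent to the cloud instead of raw readings.

        Assumes at least one reading has been seen since the last call.
        """
        out = {
            "n": len(self._window),
            "mean": statistics.fmean(self._window),
            "stdev": statistics.pstdev(self._window),
        }
        self._window.clear()
        return out


def retrain(current: ThresholdModel, summaries: list[dict]) -> ThresholdModel:
    """Cloud side: derive a new limit from fleet-wide summaries (toy rule)."""
    total = sum(s["n"] for s in summaries)
    mean = sum(s["mean"] * s["n"] for s in summaries) / total
    stdev = max(s["stdev"] for s in summaries)
    return ThresholdModel(version=current.version + 1, limit=mean + 3 * stdev)
```

A node would call `infer` on every reading, send `summary()` upstream periodically, and swap in whatever `retrain` returns when the cloud pushes it back.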

Navigating the Challenges of the Edge

While the potential of edge computing is transformative, its adoption is not without challenges.

- Orchestration and Management: Managing thousands of distributed, heterogeneous edge devices, each with different hardware, software, and connectivity, is incredibly complex. New tools and platforms are required to provision, update, and secure these remote nodes at scale.

- Hardware Standardization: The edge exists across a diverse range of hardware, from tiny microcontrollers to powerful local servers. Developing and deploying applications that run seamlessly across this varied landscape requires robust containerization and standardization.

- Physical Environment: Edge nodes are often deployed in challenging environments outside the data center (factory floors, cell towers, remote vehicles) where they must contend with extreme temperatures, vibration, and limited physical security.

Overcoming these hurdles requires a shift in mindset and the development of new, unified operating systems and management frameworks designed specifically for distributed, resource-constrained environments.
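One practice management tools often use when updating a mixed fleet is a staged rollout: update a small canary group first, then the rest if nothing breaks. The sketch below plans two waves and draws canaries from every hardware architecture so each platform is exercised early. It illustrates the idea under assumptions. The `Node` type, the `plan_waves` function, and the 10% canary fraction are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    node_id: str
    arch: str  # e.g. "arm64", "x86_64"


def plan_waves(nodes: list[Node], canary_fraction: float = 0.1) -> list[list[Node]]:
    """Split the fleet into a canary wave and a remainder wave,
    taking canaries from every hardware architecture."""
    by_arch: dict[str, list[Node]] = {}
    for node in nodes:
        by_arch.setdefault(node.arch, []).append(node)

    canary: list[Node] = []
    rest: list[Node] = []
    for group in by_arch.values():
        k = max(1, int(len(group) * canary_fraction))  # at least one per architecture
        canary.extend(group[:k])
        rest.extend(group[k:])
    return [canary, rest]
```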

Conclusion: The Intelligent Frontier

Edge computing represents the next great evolution in distributed intelligence. By pushing processing power closer to the data source, it is fundamentally changing what is possible in areas ranging from life-saving medical devices to fully autonomous industrial operations.

The move to the edge is an acknowledgement that the sheer volume of data and the need for immediate, contextual intelligence can no longer be served by a purely centralized model. The edge is not just a technological trend; it is the intelligent frontier of the digital world, empowering devices and systems to act faster, operate more reliably, and unlock a new generation of real-time, data-driven innovation. For enterprises looking to maintain a competitive advantage in the coming decade, embracing the power of the edge is no longer optional—it is essential for survival and success.