We are currently witnessing the end of the “Wild West” era of Artificial Intelligence. For years, the mantra of Silicon Valley was “move fast and break things.” In the context of AI, however, we have realized that what we might break is not just a taxi industry or a hotel chain, but fundamental democratic processes, individual privacy, and social trust.

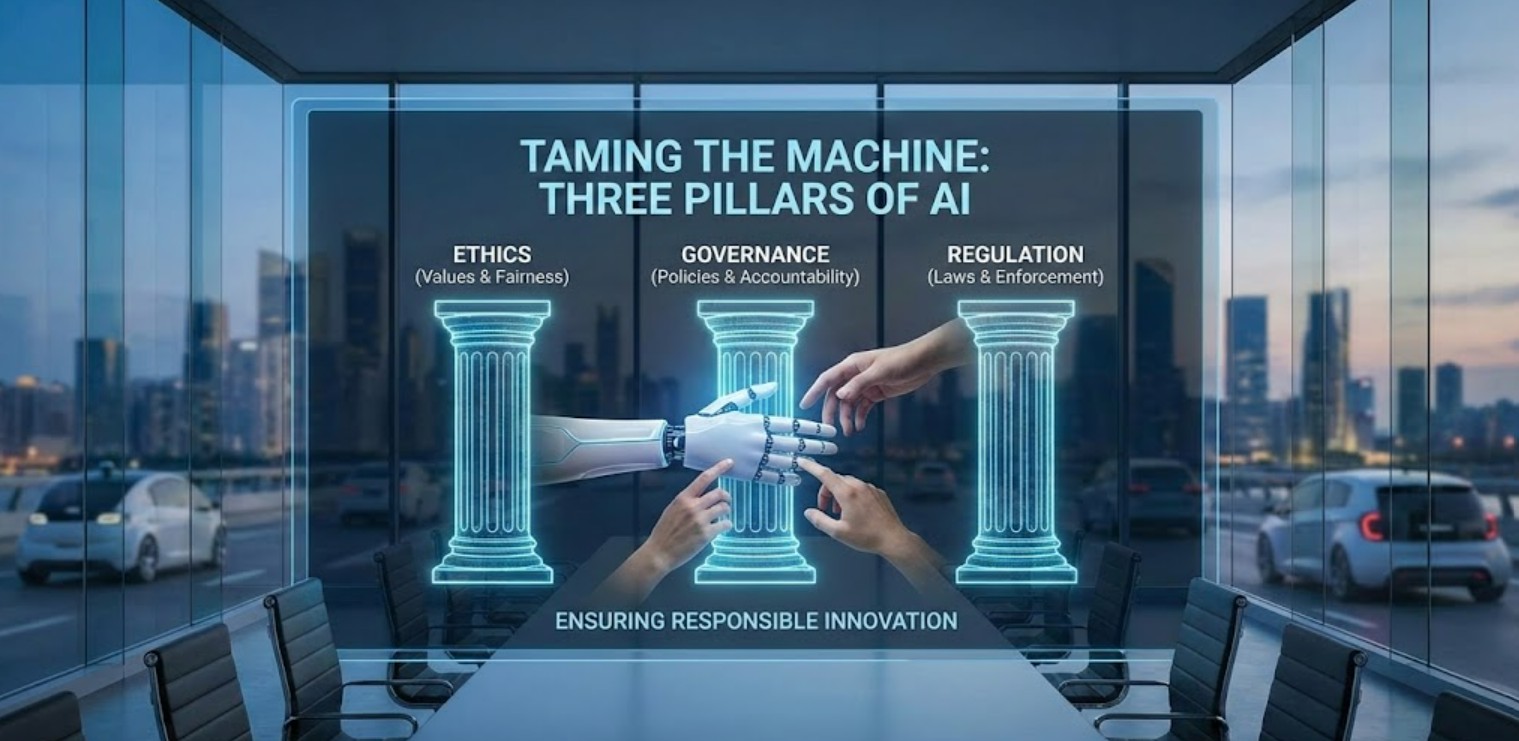

As AI systems transition from experimental curiosities to critical infrastructure—powering everything from hiring decisions to medical diagnoses—the conversation has shifted from capability to responsibility. To understand this shift, we must look at the three distinct but interconnected pillars that support the responsible development of AI: Ethics, Governance, and Regulation.

I. AI Ethics: The Moral Compass (The “Why”)

Ethics is the foundation. It is the philosophical “north star” that guides what we should do, rather than just what we can do. AI ethics is not about complying with a law; it is about alignment with human values.

The Black Box Problem

One of the central ethical challenges is transparency. Modern deep learning models, particularly Large Language Models (LLMs), operate as “black boxes.” We feed them data, and they produce an answer, but the internal logic—the specific path of neurons firing—is often indecipherable even to the engineers who built them.

Ethically, this creates the “Right to Explanation” problem. If an AI denies a loan application or a parole request, does the affected human have a moral right to know why? Many ethical frameworks argue yes, which is a major motivation for the development of Explainable AI (XAI).
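As a rough illustration of what an explanation could look like, the sketch below scores a loan application with a tiny linear model and reports how much each input pushed the score up or down. The weights, feature names, and threshold are invented for this example. Real credit models are far more complex, and explaining deep networks typically needs dedicated XAI techniques rather than this direct readout.

```python
# Hypothetical weights for an illustrative linear credit-scoring model.
# All names and numbers here are invented for the example.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = 0.5
THRESHOLD = 0.0  # a score at or above this value means "approve"


def explain_decision(applicant):
    """Return the decision plus each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    # Sort so the features that hurt the applicant most come first.
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return {
        "approved": score >= THRESHOLD,
        "score": round(score, 3),
        "reasons": reasons,
    }


result = explain_decision({"income_k": 40, "debt_ratio": 0.6, "late_payments": 3})
```

In this toy case the applicant is declined, and the first entry in `reasons` names the factor that counted most against them, which is the kind of answer a “right to explanation” asks for.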

The Bias Crisis

AI is a mirror reflecting the data it was trained on. If that data contains historical prejudices, the AI will not only learn them but can amplify them.

- Historical Bias: If a hiring algorithm is trained on ten years of resumes from a male-dominated industry, it may downgrade resumes containing the word “women’s” (e.g., “women’s chess club captain”).
- Sampling Bias: If a facial recognition system is trained primarily on light-skinned faces, it will have higher error rates for darker-skinned individuals, leading to potentially disastrous consequences in law enforcement.
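One way to make sampling bias visible is to break error rates out by group instead of reporting a single accuracy figure. The sketch below assumes each evaluation record carries a hypothetical group label alongside the predicted and true labels. The function names and the sample data are illustrative only.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up evaluation results for two groups, "A" and "B".
sample = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 1), ("B", 1, 1), ("B", 0, 0),
]
rates = error_rate_by_group(sample)
gap = max_error_gap(rates)
```

A single overall accuracy number would hide the fact that group "B" sees twice the error rate of group "A" in this made-up data.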

Core Ethical Principles: Global frameworks, such as those from the OECD and UNESCO, overlap substantially. One widely cited synthesis, adapted from bioethics, distills them into five key principles:

- Beneficence: AI should do good and benefit humanity.
- Non-maleficence: AI should do no harm (safety and security).
- Autonomy: AI should preserve human agency, not manipulate it.
- Justice: AI should be fair and non-discriminatory.
- Explicability: AI decision-making should be transparent.

II. AI Governance: From Theory to Practice (The “How”)

If Ethics is the philosophy, Governance is the bureaucracy, in the best sense of the word. Governance refers to the internal policies, structures, and processes an organization implements to ensure its AI adheres to ethical principles and legal requirements.

Governance answers the question: Who is responsible when the AI makes a mistake?

The AI Lifecycle Approach

Effective governance is not a one-time check; it is a continuous cycle.

- Design Phase: Defining the purpose of the AI. Is this use case ethical? (e.g., Should we build an emotional manipulation bot for children? Governance says no.)
- Data Curation: Auditing datasets for bias and copyright clearance before training begins.
- Development & Training: Using techniques like Reinforcement Learning from Human Feedback (RLHF) to align the model’s behavior with safety guidelines.
- Deployment & Monitoring: AI models suffer from “drift”: their performance can degrade or change over time. Governance requires continuous monitoring to ensure the model doesn’t become toxic or inaccurate after release.
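Drift monitoring can be sketched with the Population Stability Index (PSI), a common way to compare the distribution of a feature or model score at training time against what is seen in production. This is a minimal illustration, not a prescribed method: the bin count, the floor for empty bins, and the function names are assumptions, and the 0.25 alert threshold is a widely used rule of thumb rather than a standard.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare two samples of a numeric value using equal-width bins."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def has_drifted(expected, actual, threshold=0.25):
    """Flag drift when PSI exceeds the (assumed) alert threshold."""
    return population_stability_index(expected, actual) > threshold


baseline = [i / 100 for i in range(100)]          # scores seen at training time
production = [0.5 + i / 200 for i in range(100)]  # scores shifted upward later
```

In practice a check like `has_drifted(baseline, production)` would run on a schedule, with an alert routed to whoever owns the model when it returns `True`.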

Red Teaming and Risk Management

A critical tool in modern governance is Red Teaming. This involves a group of ethical hackers and domain experts attacking the AI model to find vulnerabilities. They try to trick the AI into generating hate speech, giving bomb-making instructions, or leaking private data.

Organizations are increasingly adopting the NIST AI Risk Management Framework (AI RMF), which is organized around four functions: Govern, Map, Measure, and Manage. It moves companies away from vague promises to concrete documentation.

III. AI Regulation: The Force of Law (The “Must”)

Regulation is the external enforcement mechanism. While ethics are voluntary and governance is self-imposed, regulation is mandatory. We are currently seeing a global fragmentation in how governments approach this.

The European Union: The “Gold Standard”

The EU AI Act is widely described as the world’s first comprehensive AI law. It entered into force on 1 August 2024, with obligations phasing in through 2026 and 2027. It adopts a Risk-Based Approach:

- Unacceptable Risk (Banned): These systems are prohibited entirely. Examples include social scoring systems (like those seen in China) and real-time remote biometric identification (facial recognition) in public spaces by police (with narrow exceptions).
- High Risk (Strictly Regulated): AI used in critical infrastructure, education, employment, or law enforcement. These require rigorous conformity assessments, high-quality data governance, and human oversight.
- Limited Risk (Transparency): Chatbots and deepfakes. The user must be informed they are interacting with a machine.
- Minimal Risk: Spam filters, video games. No new obligations.
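Inside an organization, the four tiers often end up as a simple inventory lookup used for first-pass triage. The sketch below is illustrative only: the use-case names are hypothetical, the mapping just mirrors the examples given for each tier, and real classification under the Act requires legal analysis of the specific system.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "banned"
    HIGH = "strictly regulated"
    LIMITED = "transparency obligations"
    MINIMAL = "no new obligations"


# Hypothetical internal inventory; entries mirror the article's examples only.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "hiring_screen": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> Optional[RiskTier]:
    """Return the recorded tier, or None so unknown systems go to legal review."""
    return EXAMPLE_TIERS.get(use_case)
```

Returning `None` for an unlisted use case, rather than defaulting to minimal risk, is a deliberate choice here: an unclassified system should be escalated, not waved through.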

The “Brussels Effect” suggests that because the EU market is so large, multinational tech giants will likely adopt EU standards globally to avoid maintaining two separate systems.

The United States: Innovation First

The US approach is more decentralized and market-driven. There is no single “AI Law.” Instead, the US relies on a patchwork of:

- Executive Orders: Such as the October 2023 Order on “Safe, Secure, and Trustworthy AI” (Executive Order 14110), which focused on national security and required companies to report safety test results for the most powerful models. The order was rescinded in January 2025.
- Agency Action: Existing regulators (FTC, EEOC) using existing laws to police AI (e.g., the FTC declaring that “there is no AI exemption to the laws on the books” regarding fraud).

China: State Control

China has moved quickly with specific regulations targeting algorithms and content. Its regulations focus heavily on Generative AI, requiring that model outputs be accurate and aligned with “socialist core values.” This creates a tight control environment where political stability is prioritized alongside technological advancement.

IV. The Future Challenges: 2025 and Beyond

As we look toward the immediate future, the interplay of these three pillars faces new stress tests.

1. The Rise of “AI Agents”

Until recently, AI was a chatbot: you talk, it answers. In 2025, we are moving toward Agentic AI, meaning systems that can act. An AI agent can book a flight, negotiate a contract, or write code and execute it.

- Governance Challenge: How do you govern a system that can take autonomous actions in the real world?
- Legal Challenge: If an AI Agent negotiates a bad contract, is the contract voidable?
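One commonly discussed answer to the governance question is a human-in-the-loop gate: the agent proposes an action, and a policy decides whether it may run automatically or must wait for a person. The sketch below is a hypothetical minimal version. The class names, the cost limit, and the reversibility flag are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedAction:
    name: str
    cost_usd: float
    reversible: bool


@dataclass
class ApprovalGate:
    max_auto_cost_usd: float = 100.0  # assumed policy limit
    audit_log: list = field(default_factory=list)

    def review(self, action: ProposedAction) -> str:
        """Auto-approve only cheap, reversible actions; escalate everything else."""
        if action.reversible and action.cost_usd <= self.max_auto_cost_usd:
            decision = "auto_approve"
        else:
            decision = "needs_human"
        # Every decision is recorded so responsibility can be traced later.
        self.audit_log.append((action.name, decision))
        return decision


gate = ApprovalGate()
flight = gate.review(ProposedAction("book_flight", 80.0, reversible=True))
contract = gate.review(ProposedAction("sign_contract", 50.0, reversible=False))
```

Here the refundable flight booking goes through on its own, while the contract is held for a person even though it costs less, because it cannot be undone. The audit log is what lets an organization later answer who, or what, approved a given action.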

2. The Pacing Problem

Law moves at the speed of bureaucracy; AI moves at the speed of Moore’s Law. By the time the EU AI Act is fully enforceable in 2026/2027, the technology it was written to regulate may be obsolete. Regulators are attempting to solve this with codes of conduct and codes of practice that can be updated faster than legislation.

3. Copyright and Data Rights

The “raw material” of the AI revolution is human-created data. Artists, writers, and publishers are suing AI companies for training on their work without compensation. The resolution of these lawsuits will effectively become a form of judicial regulation that shapes the economics of the entire industry.

Conclusion

Trust is the currency of the AI economy. Without Ethics, we build monsters. Without Governance, we build loose cannons. Without Regulation, we build monopolies that ignore the public good.

The goal is not to stop the train, but to build the tracks and the brakes so that we can travel at high speeds safely. For business leaders and developers, the message is clear: Compliance is no longer just a legal checklist; it is a competitive advantage. In a world of synthetic media and uncertainty, the most valuable asset an AI company can offer is safety.