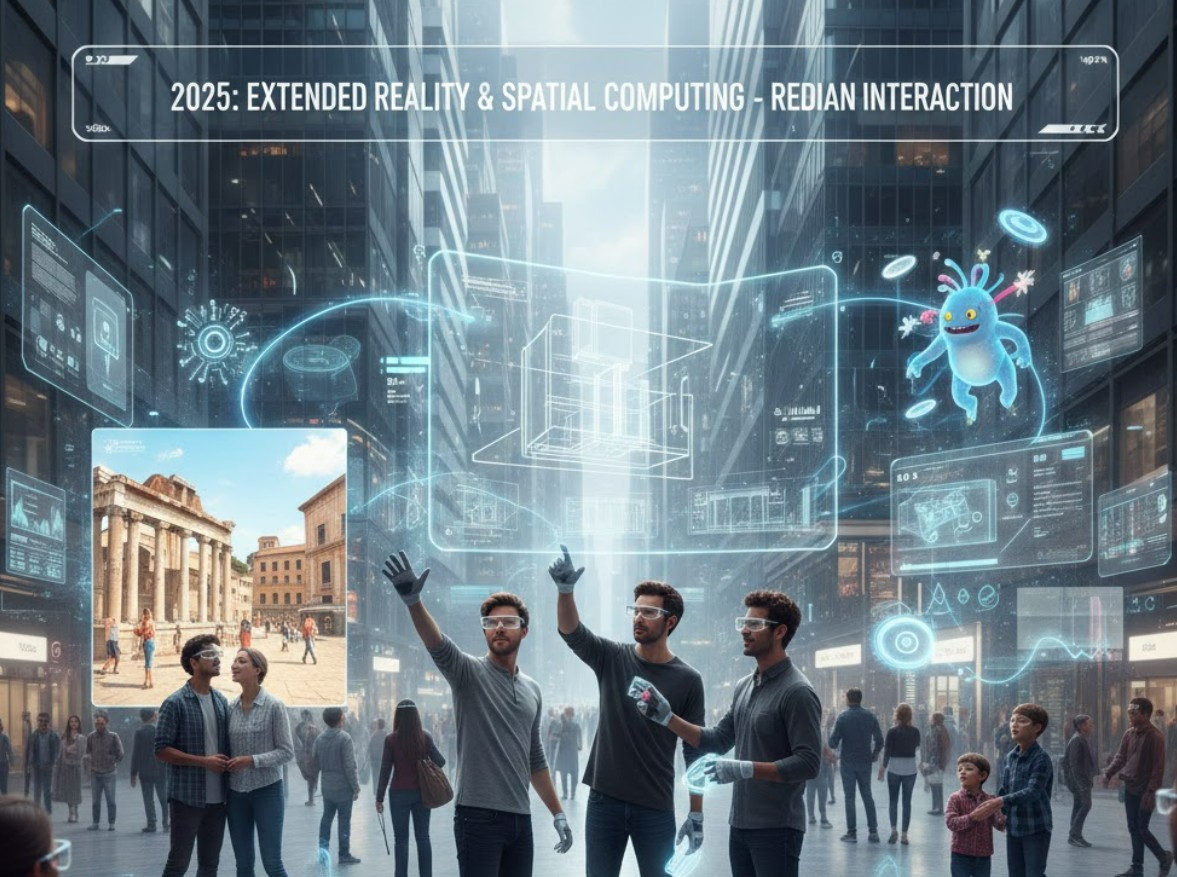

For decades, our relationship with digital content has been trapped behind glass. We peer into the digital world through the rectangles of smartphones, monitors, and televisions, acting as observers looking in. But as we move deeper into 2025, that paradigm is shattering. We are no longer just looking at computers; we are stepping inside them, and they are stepping out to meet us.

This is the era of Extended Reality (XR) and Spatial Computing—a technological convergence that is dissolving the barrier between atoms and bits, transforming the world itself into a canvas for digital information.

Defining the Terrain: The Vocabulary of Immersion

To understand the shift, we must first parse the terminology, which often suffers from marketing hype.

Extended Reality (XR) is the umbrella term for the spectrum of immersive technologies. It encompasses:

- Virtual Reality (VR): Fully occluded experiences where the physical world is replaced by a digital one (e.g., gaming on a Meta Quest).
- Augmented Reality (AR): Digital overlays on the real world, typically viewed through a transparent lens (e.g., smart glasses) or a phone screen.
- Mixed Reality (MR): A sophisticated blend where digital objects not only overlay the real world but interact with it: bouncing off walls or hiding behind furniture.
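The “hiding behind furniture” effect in mixed reality comes down to occlusion, which can be sketched as a per-pixel depth comparison. This is a simplified illustration, not how any particular headset implements it. It assumes the device supplies a depth map of the real scene (distances in meters) aligned with the camera image. The function name `composite` is hypothetical, and the string “pixels” stand in for real image buffers.

```python
INF = float("inf")  # marks pixels with no virtual content in front of the viewer

def composite(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Per pixel, show the virtual pixel only if it is closer than the real surface."""
    out = []
    for y in range(len(camera_rgb)):
        row = []
        for x in range(len(camera_rgb[y])):
            v = virtual_rgb[y][x]
            # None means nothing virtual is drawn at this pixel.
            if v is not None and virtual_depth[y][x] < real_depth[y][x]:
                row.append(v)                 # virtual object is in front
            else:
                row.append(camera_rgb[y][x])  # real world occludes it (or nothing there)
        out.append(row)
    return out

# A one-row "image": a virtual cat 2.0 m away, partly behind a couch 1.5 m away.
camera = [["wall", "couch", "wall"]]
real_depth = [[3.0, 1.5, 3.0]]
virtual = [["cat", "cat", None]]
virtual_depth = [[2.0, 2.0, INF]]
print(composite(camera, real_depth, virtual, virtual_depth))  # [['cat', 'couch', 'wall']]
```

Real systems do this comparison on the GPU with noisy, lower-resolution depth, but the rule is the same: whichever surface is nearer to the viewer wins the pixel.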

Spatial Computing, however, is the broader philosophy and technical architecture that makes high-quality XR possible. It refers to the ability of devices to understand the physical world in three dimensions. It is not just about displaying an image; it is about the computer knowing that the image is sitting on a table, that the user is walking toward it, and that the light from the window should cast a shadow on it. If XR is the “what,” Spatial Computing is the “how.”
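The idea of a computer “knowing that the image is sitting on a table” can be illustrated with anchors: content is positioned relative to a detected surface rather than to the screen. The sketch below is a simplified assumption, not any vendor’s API. The `Anchor` type, its yaw-only rotation about the vertical axis, and all coordinates are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Anchor:
    """Hypothetical anchor for a detected surface: position in meters, yaw in radians."""
    x: float
    y: float
    z: float
    yaw: float  # rotation about the vertical (y) axis

def to_world(anchor, local):
    """Convert an offset expressed in the anchor's frame into world coordinates."""
    lx, ly, lz = local
    c, s = math.cos(anchor.yaw), math.sin(anchor.yaw)
    # Rotate about the y axis, then translate by the anchor's position.
    return (c * lx + s * lz + anchor.x,
            ly + anchor.y,
            -s * lx + c * lz + anchor.z)

table = Anchor(x=1.0, y=0.75, z=-2.0, yaw=0.0)
cup = (0.1, 0.0, 0.0)  # 10 cm along the table's local x axis, resting on its surface

print(to_world(table, cup))  # (1.1, 0.75, -2.0)

# If tracking later refines the table's pose, the cup follows without app changes.
table.yaw = math.pi / 2
print(to_world(table, cup))  # approximately (1.0, 0.75, -2.1)
```

Storing the cup’s position relative to the table, rather than in absolute coordinates, is what lets the virtual object stay put on the real surface as the device’s understanding of the room improves.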

The 2025 Shift: Why Now?

Why has this technology, long promised but rarely delivered, suddenly reached an inflection point in the mid-2020s? The answer lies in the convergence of three critical technologies: Pass-Through Video, Semantic Understanding, and Generative AI.

1. The Pass-Through Standard

Early VR was isolating. Today, headsets like the Apple Vision Pro and Meta Quest 3 have normalized “pass-through” video: high-resolution cameras digitize the outside world and feed it to the user’s eyes with latency low enough that the view feels live. This allows users to remain present in their physical environment while manipulating 3D apps, effectively turning VR headsets into powerful AR computers.

2. Semantic Understanding

Old AR devices saw the world as a mesh of geometry—they knew something was there, but not what it was. Modern spatial computers possess semantic understanding. They don’t just see a flat plane; they identify it as a “table.” They recognize a “door,” a “human,” or a “cat.” This context awareness allows apps to behave intelligently, such as a virtual pet that knows to jump onto the couch but avoid the coffee table.
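The virtual-pet example can be sketched by contrasting a geometry-only choice with a semantics-aware one. This is an illustration under stated assumptions: the `Surface` type, the label strings, the `JUMP_TARGETS` rule, and the room layout are all hypothetical, not any platform’s scene-understanding API.

```python
import math
from dataclasses import dataclass

@dataclass
class Surface:
    label: str     # hypothetical semantic label, e.g. "table", "couch", "floor"
    center: tuple  # (x, y, z) in meters

# Hypothetical behavior rule: the pet may jump onto seating, never onto tables.
JUMP_TARGETS = {"couch", "bed"}

def nearest(pos, surfaces):
    """Geometry only: the closest surface, regardless of what it is."""
    return min(surfaces, key=lambda s: math.dist(pos, s.center))

def pick_jump_target(pos, surfaces):
    """Semantics-aware: the closest surface whose label permits jumping, or None."""
    allowed = [s for s in surfaces if s.label in JUMP_TARGETS]
    return nearest(pos, allowed) if allowed else None

room = [
    Surface("table", (0.5, 0.45, -1.0)),
    Surface("couch", (2.0, 0.45, -1.5)),
    Surface("floor", (0.0, 0.0, 0.0)),
]
pet = (0.4, 0.0, -1.0)

print(nearest(pet, room).label)           # table
print(pick_jump_target(pet, room).label)  # couch
```

To a geometry-only system the coffee table and the couch are both flat, raised planes, so the nearest one wins; the label is what lets the app apply a rule like “couch yes, table no.”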

3. The Generative AI Multiplier

Perhaps the biggest accelerant in 2025 is Generative AI. Historically, creating 3D assets for XR was slow and expensive. Now, GenAI allows users to summon 3D environments or objects simply by speaking. Furthermore, “Spatial AI” agents can now see what the user sees, offering real-time assistance—analyzing a broken engine part and highlighting the specific bolt that needs tightening.

Industry 4.0 and the Enterprise Revolution

While consumer adoption grows steadily, the enterprise sector has become the true proving ground for spatial computing.

Manufacturing and Digital Twins

Factory floors are being revolutionized by “Digital Twins,” high-fidelity virtual replicas of physical systems. In 2025, a plant manager doesn’t just look at a spreadsheet; they walk through a holographic projection of the assembly line. If a machine is overheating, it glows red in their AR view, displaying real-time sensor data floating above it. Companies like Siemens and NVIDIA have pioneered these industrial metaverses, allowing problems to be solved virtually before they cause physical downtime.
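The “glows red when overheating” behavior can be sketched as a twin object that mirrors sensor readings and derives an overlay state from them. This is a minimal sketch under assumptions: the `MachineTwin` class, the machine ID, and both temperature thresholds are hypothetical, and real limits depend on the equipment.

```python
from dataclasses import dataclass

# Hypothetical thresholds in degrees Celsius.
WARN_C = 70.0
CRITICAL_C = 85.0

@dataclass
class MachineTwin:
    machine_id: str
    temperature_c: float = 0.0

    def update(self, reading_c):
        """Mirror the latest sensor reading from the physical machine."""
        self.temperature_c = reading_c

    def overlay_color(self):
        """Highlight color the AR view would draw over this machine."""
        if self.temperature_c >= CRITICAL_C:
            return "red"
        if self.temperature_c >= WARN_C:
            return "amber"
        return "none"

    def label(self):
        """Floating text shown above the machine."""
        return f"{self.machine_id}: {self.temperature_c:.1f} °C"

press = MachineTwin("press-07")
press.update(88.2)
print(press.overlay_color(), press.label())  # red press-07: 88.2 °C
```

Keeping the rule in the twin rather than in the headset app means every viewer, whether in AR on the floor or on a dashboard, sees the same state.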

Healthcare: The X-Ray Vision

Surgeons are using mixed reality overlays to gain “superpowers.” Instead of looking away at a monitor during a procedure, surgeons can see vital signs and MRI scans projected directly onto the patient’s body in their field of view. Beyond surgery, VR has shown promising results in pain management, where immersive environments are used to distract the brain from chronic pain signals, potentially reducing reliance on opioids.

Training and Education

The concept of “learning by doing” has been digitized. Walmart and Emirates Airlines have used VR to train thousands of employees in scenarios that would be impossible or too dangerous to replicate in real life, from managing Black Friday crowds to handling mid-flight emergencies. In classrooms, students are no longer just reading about the Roman Empire; they are standing in the Forum, holding virtual artifacts that obey the laws of physics.

The Hardware Divide: Headsets vs. Glasses

As of 2025, the market is split into two distinct form factors, each serving different needs.

On one side are the Spatial Computers (Headsets). These are powerful, face-worn devices (like the Vision Pro or high-end Quest models). They offer incredible fidelity and immersion but are still relatively heavy and socially obtrusive. They are the “workstations” of the spatial era—perfect for deep work, watching movies, or immersive design.

On the other side are Smart Glasses. Devices like the Ray-Ban Meta glasses and their successors forgo displays entirely in favor of AI audio assistants and cameras. They are lightweight, stylish, and socially acceptable. While they don’t yet project holograms, they serve as an “AI on your face,” whispering translations in your ear or answering questions about what you are looking at. The industry race is now focused on merging these two: shrinking the power of the headset into the form factor of glasses.

The Ethical and Privacy Minefield

With great immersion comes great risk. Spatial computing introduces privacy challenges that dwarf those of the smartphone era.

- Biometric Intimacy: To work properly, spatial computers track where you look (eye tracking) and how you move (gait analysis), and can even pick up cues about your emotional state (pupil dilation). This data is a goldmine for advertisers but a nightmare for privacy advocates.
- Spatial Mapping: These devices constantly scan your private spaces (your bedroom, your office, your living room), creating detailed 3D maps of your life. Who owns the digital map of your home?
- The “Reality” Gap: As AI-generated content becomes indistinguishable from reality, the potential for “deepfakes” moves from video to real-time spatial encounters. Users may soon need digital verification to ensure the person sitting across from them in a virtual meeting is a real human, not an AI avatar.

The Future Outlook: The Invisible Interface

Looking toward 2030, the goal of spatial computing is to make the computer itself disappear. We are moving toward a world of “ambient computing,” where digital information is woven so seamlessly into the fabric of our lives that we stop noticing the device delivering it.

We will likely see a move away from “apps” as we know them—icons on a grid—toward context-aware “experiences” that appear only when needed. A recipe card that floats above your cutting board when you start cooking; a navigation arrow that appears on the sidewalk only when you are lost.
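The shift from “apps” to experiences that appear only when needed can be sketched as rules evaluated against the device’s current context. Everything here is hypothetical: the `Context` fields, the label `"cutting_board"`, the experience names, and the 20 m “lost” threshold are illustrative assumptions, not part of any real platform.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: how far off the planned route counts as "lost".
LOST_THRESHOLD_M = 20.0

@dataclass
class Context:
    """Hypothetical snapshot of what the device has inferred about the user."""
    activity: str                 # e.g. "cooking" or "walking"
    nearby_object: Optional[str]  # semantic label of what the user is facing
    off_route_m: float = 0.0      # distance from the planned route, in meters

def active_experiences(ctx):
    """Return the experiences to show right now; an empty list means show nothing."""
    shown = []
    if ctx.activity == "cooking" and ctx.nearby_object == "cutting_board":
        shown.append("recipe_card")
    if ctx.activity == "walking" and ctx.off_route_m > LOST_THRESHOLD_M:
        shown.append("navigation_arrow")
    return shown

print(active_experiences(Context("cooking", "cutting_board")))         # ['recipe_card']
print(active_experiences(Context("walking", None, off_route_m=5.0)))   # []
print(active_experiences(Context("walking", None, off_route_m=35.0)))  # ['navigation_arrow']
```

The default is an empty list: nothing is on screen unless the context calls for it, which is the inversion of a grid of always-present app icons.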

Conclusion

Extended Reality and Spatial Computing represent the most significant shift in human-computer interaction since the mouse and keyboard. They promise a future where technology is more intuitive, more immersive, and paradoxically, more human—freeing us from our screens to look up and interact with the world around us, enhanced rather than distracted.

As we navigate this transition in 2025, the challenge will not be the technology itself, but how we choose to govern it. We have the opportunity to build a spatial web that enriches our physical lives, provided we design it with privacy, agency, and humanity at its core.